Yesterday I posted about the debate over profiling Muslims at the airport, and how Bruce Schneier persuasively argued that the concept, which seems so intuitively sensible to so many Americans, is a terrible idea even just from a security point of view. He also commented on the other, less tangible costs that such a scheme would impose, such as the alienation of Muslims from American life, and the corruption of our values.

There is one other likely negative effect of profiling that is worth mentioning.

One of the strengths of Schneier’s argument is that he focuses on the bureaucratic nature of the TSA—I don’t mean that as a pejorative, just that it is of necessity a large, rule-based organization that seeks to standardize its policies and practices. Schneier emphasizes the difficulty the TSA would have in implementing standards for the profiling of Muslims, noting that, “since there's no such thing as ‘looking Muslim’—it's a belief system, not an ethnic group—they're going to sort on something like ‘looking Arab,’ whatever that ends up meaning.”

The gross inadequacy of profiling based on looks, given the wide variety of ethnicities within the Muslim world and the wide variety of people who could be construed as “looking Arab,” would quickly become apparent. What would happen at that point?

Inevitably, the screening agency would start feeling pressure to go beyond looks.

“We are already using a profile, but with just a few simple data points, we can make it so much more accurate,” they will think. Perhaps they would use a computer program to assess the ethnicity of a passenger’s name: first, last, or middle. Such a small step. While we’re at it, might as well check whether a person is a naturalized citizen, or has traveled frequently to an Arab or Muslim country. It wouldn’t be that hard to check out their parents too, who might have just such a history, or a more ethnically telling name.

It might also improve the accuracy of their targeting if they could take the passenger’s address and cross-reference it against census data. After all, a person who lives in certain areas of Detroit is far more likely to be Arab or Muslim than someone who lives in, say, Albuquerque. A probability could be assigned. Although address is not part of the data given to the government under , it could be discovered relatively easily by cross-referencing other databases. Lists of past addresses could also be obtained from multiple private database companies.

And once the government starts buying data from private aggregators, all kinds of new possibilities open up. Do their former roommates have names that suggest Arab ethnicity? Is a passenger on a list of “Religious Households”? That’s currently for sale and from similar companies, which would no doubt love to have the government as a customer (and think how that would incentivize them to engage in all kinds of clever, aggressive, and intrusive new techniques for gathering data on individuals’ religion). Given how vigorously the online marketing industry now tracks people’s online activity, that industry might also be a good source of information on the web sites passengers visit, their political interests, and so forth.

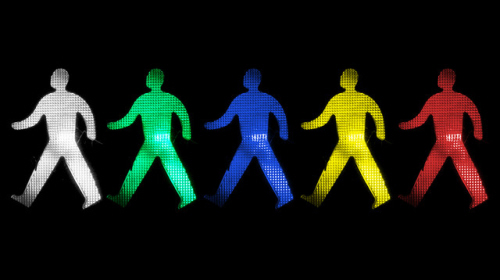

All this data and who knows what else could then be crunched by a computer algorithm and passed along to the screeners, who might get it boiled down to a simple cue—say, a green, yellow or red light for each passenger.

Privacy invasion? But we’re already doing profiling, they’ll say, we’re just trying to make it more accurate so that fewer people are affected.

At this point, a certain portion of Americans will think to themselves, “that does sound very intrusive, but sadly we need to impose that on Muslim travelers.” This error overlooks a simple fact: in order to identify those who belong to a targeted group, everyone will need to be subjected to these government checks, evaluations, and intrusions. We’ve seen the same fallacy at work in the immigration context through identity programs such as E-Verify and .

This kind of vision is hardly the product of a paranoid imagination:

• A scheme very similar to the above, called CAPPS II, was proposed by the Bush Administration in 2002. As I discussed here, that program would have tapped into commercial data sources to perform background checks on every air passenger, and crunched that data to produce a profile of each traveler’s “risk to airline safety.” Screeners would even have gotten the red, yellow, or green light for each traveler.

• Although the plan eventually died, its basic underlying concept has been revived, in scaled-back form, in the TSA’s misguided Pre-Check program.

• In addition, as I mentioned in this post last week, U.S. Customs and Border Protection runs a secretive program called the Automated Targeting System that assigns a computer-generated “real-time rule based evaluation” risk assessment to every traveler who crosses the nation’s border. We know little about how these evaluations are constructed.

So the concept of using multiple sources of data to construct computerized evaluations of passengers is already a well-established threat to our privacy. Any program that institutionalized profiling of Muslims or any other group would no doubt dovetail with that approach—and supercharge it, to the detriment of us all.

Finally, there’s one other problem with the idea of allowing profiling at our airports: once accepted there, that approach would inevitably become more acceptable in other security contexts. Unfortunately, we have seen too many efforts to push airline security techniques beyond the unique environment of aviation and into other parts of our society—for example through the silly VIPR program, in which airport-style security is set up in random public spaces in our travel infrastructure such as train stations.

Fortunately, an explicit policy of ethnic or religious profiling in U.S. airports is not likely to happen any time soon. However, the government is slowly beginning to go down the road of profiling of another sort: profiling based on intrusive inquiries into the facts of our lives. In addition to all its other problems, that kind of profiling may incorporate hidden or de facto profiling based on ethnicity.