Judge Allows ACLU Case Challenging Law Preventing Studies on ‘Big Data’ Discrimination to Proceed

First Amendment Lawsuit Brought on Behalf of Academic Researchers Who Fear Prosecution Under the Computer Fraud and Abuse Act

WASHINGTON — A federal judge has rejected the government’s position in a lawsuit filed by a group of university professors and journalists who argue that a federal computer crimes law unconstitutionally criminalizes research aimed at uncovering whether online algorithms result in racial, gender, or other illegal discrimination in areas such as employment and housing. The district court’s decision late Friday allowed the case to proceed, although the judge did dismiss several of the claims, removing some of the plaintiffs from the lawsuit.

The case, brought by the American Civil Liberties Union in June 2016, challenges a section of the Computer Fraud and Abuse Act (CFAA) that the government argues makes it a crime to violate a website’s terms of service. Those terms, which are arbitrarily set by individual sites and can change at any time, often prohibit things like creating multiple “tester” accounts, providing inaccurate information to websites, or using automated methods to record publicly available data like search results and ads.

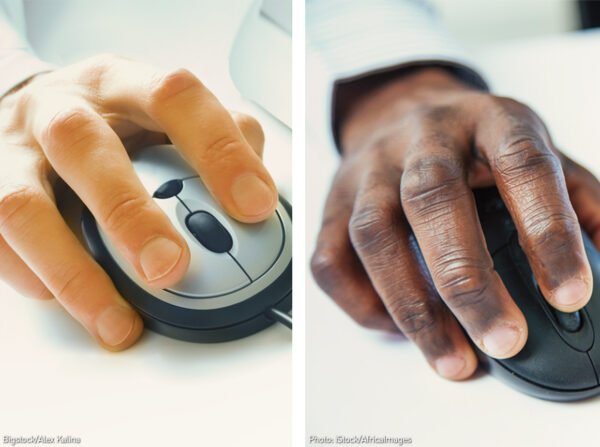

Those same practices are used by researchers to test whether sites are, for example, more likely to show certain content to people of color or to men who search employment listings. Studies like these necessarily require researchers to create dummy online identities and record what content is served up to those identities.

Judge John Bates, finding that the CFAA bars providing inaccurate information to websites, ruled that two plaintiffs can proceed on their claim that criminalizing such activity violates their free speech rights.

In his ruling, Judge Bates wrote that under the government’s interpretation, “the CFAA threatens to burden a great deal of expressive activity, even on publicly accessible websites—which brings the First Amendment into play.”

Judge Bates also found that despite the government’s contention, a proper reading of the CFAA would not criminalize the majority of the plaintiffs’ research activities because Congress had not intended such a result, and that the government’s reading of the law raises constitutional concerns.

The discriminatory outcomes that plaintiffs investigate are often the result of algorithms relying on “big data” analysis to classify people based on their web browsing habits or other information collected by social media platforms and data brokers — such algorithms then steer web users towards various products or services.

Equivalent studies to find discrimination offline — for example, where pairs of individuals of different races attempt to secure housing and jobs and compare outcomes — have been encouraged by Congress and the courts for decades in order to ensure that civil rights laws like the Fair Housing Act are not being broken. Such laws prohibit practices that result in discrimination, regardless of whether it is intentional.

“As a result of this law, the plaintiffs and countless other researchers and journalists have had to worry about potential prosecution whenever they conduct their vitally important research to uncover online discrimination,” said Esha Bhandari, a staff attorney with the ACLU Speech, Privacy, and Technology Project who argued the case last October. “We hope that the court’s opinion will allow such research and testing to go forward, free of the threat of federal criminal sanctions.”

The plaintiffs moving forward are Alan Mislove and Christo Wilson, associate and assistant professors of computer science at Northeastern University, respectively. They designed a study to test whether the ranking algorithms on major online hiring websites produce discriminatory results by systematically ranking specific classes of people — such as people of color or women — below others. The court’s decision permits Professors Mislove and Wilson to proceed with their claims that their research activity — which requires providing false information to websites as part of tester profiles — is protected under the First Amendment.

“The plaintiffs want nothing more than to secure their right to conduct the kinds of experiments online that have been used to identify and root out offline discrimination for decades,” said Rachel Goodman, staff attorney with the ACLU Racial Justice Program. “This decision is an important step forward in helping them expose online discrimination.”

The other academic plaintiffs were Christian Sandvig, an associate professor of information and communication studies at the University of Michigan, and Karrie Karahalios, an associate professor of computer science at the University of Illinois. They designed a study to determine whether the computer programs that determine what to display on real estate sites are discriminating against users by race or other factors.

The ACLU also represents First Look Media, which publishes The Intercept. The journalists there wish to investigate websites’ business practices and outcomes, including any discriminatory effects of big data and algorithms. The work of journalists often involves collecting public data from websites and other activities that may violate terms of service. This portion of the case was also dismissed.

In its own reports, the federal government has recognized the potential danger that big data algorithms will reinforce racial, gender, and other disparities.

The lawsuit, Sandvig v. Sessions, was filed in the U.S. District Court for the District of Columbia. The attorneys on the case are Bhandari and Goodman of the ACLU and Arthur B. Spitzer and Scott Michelman of the ACLU of the District of Columbia.

The ruling is here:

/legal-document/sandvig-v-sessions-opinion

More information on the case is here:

/cases/sandvig-v-sessions-challenge-cfaa-prohibition-uncovering-racial-discrimination-online