The TSA and Amtrak Police are trying out new see-through body scanners in New York City’s Penn Station that raise serious constitutional questions. And as is so often the case, the government is not being sufficiently transparent about the devices, how they will be used, on whom, and where they will eventually be deployed. We also don’t know who will have access to the information they collect or for how long.

There is also reason to believe the technology may not work as well as the TSA says it does.

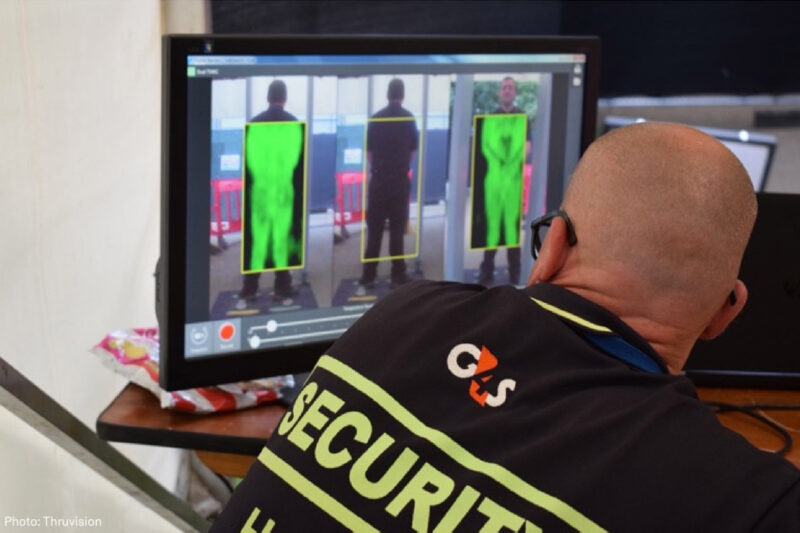

This “passive millimeter wave” technology works by detecting the heat radiating from the human body and analyzing the ways in which those emanations may be blocked by objects hidden on a person’s body. The machines do not emit x-rays or other radiation. The theory is that the operator of the technology will be able to tell if a large object such as a suicide vest is present underneath a person’s clothing. The technology uses an algorithm to determine whether there is an anomaly. Two devices are in use, one of them created by QinetiQ.

There are several concerns associated with this technology. First, it is not clear whether the anticipated uses of the technology are constitutional. Under the Fourth Amendment, the government is generally not permitted to search individuals without a warrant. Body scanners may be used in airports because of an “administrative exception” to the Fourth Amendment that the courts have found is reasonable because of the unique security vulnerabilities of aircraft. But it is far from clear that the courts will permit this exception to expand to cover every crowded public place in America.

Other factors that may affect its constitutionality include whether people are able to opt out of being searched (it appears they are not), the technology’s resolution and precision, and the extent to which it detects not only true threats but other personal belongings as well, such as back braces, money belts, personal medical devices such as colostomy bags, and anything else someone might have on their person. Will an alert from this machine be sufficient to constitute justification for detaining someone, or probable cause to search them?

We don’t know enough about the capabilities of this technology to know for sure — and we don’t know how its capabilities are likely to grow in the future. If it is as coarse a detector as television images suggest, it is likely to have a very high rate of false positives — and that’s likely to make the government want to make it ever more detailed and high-resolution. If it becomes higher resolution, that means it will see all kinds of other personal effects as well. Either way, there are serious privacy problems with this technology.

In addition, until the device can be scaled up so it scans everyone who goes through a certain area, the technology can be aimed at certain people. It’s up to the operator to determine which people get scanned and which don’t. This means people are subject to a virtual stop-and-frisk, a policing tactic historically known for its disproportionate impact on Black and Latino people.

Will officials perform this digital stop-and-frisk on a white man in a suit, or on a brown man with a beard or a Black teen in a hoodie? If history is any guide, we know the answer.

Once an anomaly is detected, a computer algorithm determines whether the abnormality presents a “green,” “yellow” or “red” risk level. We don’t know what happens if someone provokes a “yellow” or a “red” alert, or whether even some “green” alerts will still cause further scrutiny. Does a security official make a judgment call on whether to interrogate or otherwise hassle that person? If so, will they decide to scrutinize our old friends, the white man in a suit or the brown man with a beard? And will the NYPD have access to the technology or the scans?

We also don’t know how the algorithm is determining the threat level that gets spit out. We don’t know what factors it is considering, the weight of those factors, what tradeoffs occurred when the system was developed, or the data it was trained on to determine if the system is accurate. When algorithms are not tested for potential errors or bias, they are often found to have a disproportionate impact on certain groups, particularly people of color.

However the technology is deployed, there are very good reasons to doubt that it would be effective in spotting threats. Even in the most controlled conditions, such as airport scanners, millimeter wave technology can produce a lot of false positives. In a crowded, bustling location full of fast-moving people, the error rate would probably be even higher. And if there is any location where people are likely to have all kinds of things on and about their bodies, it’s a busy travel hub like Penn Station. If this device is generating alarms every minute, it will quickly become useless, and it could mean that many innocent people will be needlessly subjected to invasive searches or lengthy interrogations.

The technology may also miss true threats. Ben Wallace, a British politician who used to work for QinetiQ, has said that the scanners would likely not pick up “the current explosive devices being used by Al Qaeda,” because of their low amounts of radiation. The Government Accountability Office and even Homeland Security itself have found in the past that body scanners have a high failure rate and are easily subverted.

From media reports on the new scanners, it’s unclear how long this pilot program will last or whether these devices could eventually end up across the country. But their sudden rollout is another example of potentially invasive and discriminatory technologies being deployed with little or no public input or accountability.