The High-Definition, Artificially Intelligent, All-Seeing Future of Big Data Policing

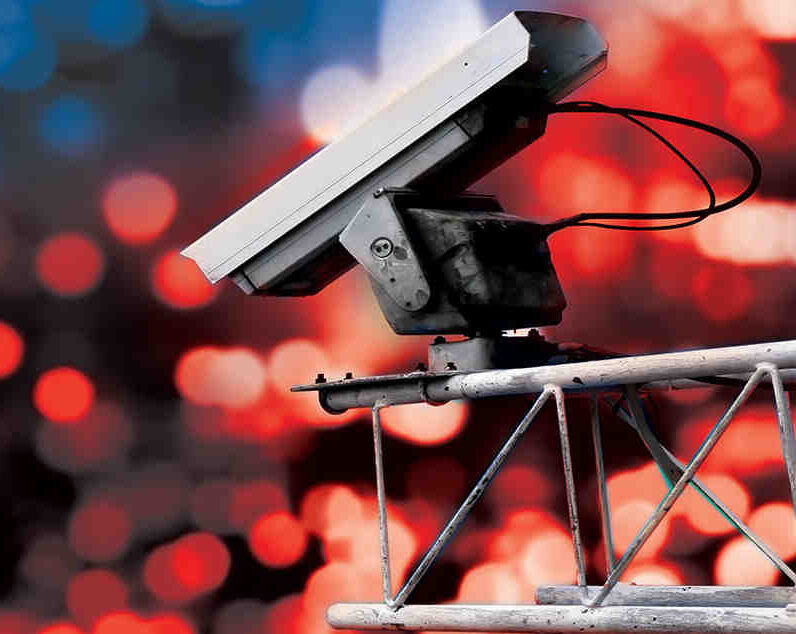

Predictive analytics and new surveillance technologies are transforming policing, helping prioritize whom police target, where they patrol, and how they investigate. But police practices will be entirely revolutionized when video surveillance cameras gain new artificial intelligence capabilities.

AI will soon allow for new forms of tracking and monitoring in ways that could reshape the balance of power between police and citizens. This is not meant to sow fears about sentient computers or sensationalize the threat of new technology. However, we should all recognize that advances in the aggregation of data about citizens in public will empower the watchers at the expense of the watched.

AI-enhanced video surveillance technology already exists, most disconcertingly in China. The Chinese government monitors its citizens using high-definition cameras equipped with facial recognition capabilities, demonstrating in real time the capacity to link hundreds of millions of cameras with the capability to identify hundreds of millions of citizens. If you jaywalk in China, video can capture it, and your photo might be displayed, in shame, on a public digital billboard.

China is the laboratory for AI-enhanced surveillance, and future test subjects should be frightened. If you try to hide from police in a big Chinese city, as a BBC journalist did for a story, you can be found in just minutes. In areas where the Chinese government wants to stifle political or religious dissent, police monitor movement with a series of cameras, smartphone scanners, and facial recognition technologies.

Three main innovations are fueling this AI-video revolution. First, camera technology is getting cheaper, and its capabilities are improving. Cameras now come equipped with 360-degree fields of view, night vision, and high-definition capabilities, which extend the scope of vision to more and more places. Second, image databases are getting more sophisticated as more images are uploaded and the ability to automatically match images to people, and to each other, improves. Finally, the ability to use artificial intelligence to find objects, patterns, or faces in the unstructured data of video footage will allow for new forms of individual targeting within a system of mass surveillance.

With access to such technology, police may be able to receive automated alerts for images that capture pre-programmed patterns of activity they deem suspicious, such as hand-to-hand transactions or loitering. Or police could search video surveillance footage for every instance of a particular symbol, such as a gang sign or a Nike swoosh, appearing on camera.

The technologies are not limited to China. Some are even being piloted in America.

While not as sophisticated, searchable enhanced video surveillance has been tested at an American university. The technology gives campus police the ability to read the lettering on a single sweatshirt among tens of thousands of students at a college football game. It also automatically alerts campus security to a person loitering in a parking lot.

East Orange, New Jersey, piloted a video surveillance system that automatically directs police to patterns of criminal activity pre-programmed into the system as “suspicious movements,” which might signify a drug deal or robbery. And Axon, a leading provider of police body cameras, is already using artificial intelligence to help sort through the hours of police footage captured by the Los Angeles Police Department.

The technology exists today to train machines to spot suspicious activities, and it’s getting cheaper and more common. Cities could buy these surveillance capabilities tomorrow. And, if history is any guide, this technology will first be deployed to poor communities, immigrant communities, and communities of color — politically powerless places that have traditionally found themselves subject to new forms of social control and surveillance. Worse, if built off existing patterns of suspicion, the datasets might replicate societal or structural biases.

So, are there any legal or constitutional limits that might prevent this future of artificially intelligent mass surveillance in America?

The short answer is not really, not yet.

A handful of cities have passed ordinances to regulate police technology. A few states have singled out particular privacy threats as warranting extra legislative protection. Congress, however, has not acted. And while one might hope that the Fourth Amendment’s privacy protections would check mass surveillance capabilities, the constitutional law is in flux and the answers uncertain.

Independent of the law, one might hope that our cultural sensibility — our shared American belief in privacy, liberty, and the freedom of association — might curtail the temptations of all-seeing surveillance. Clearly, the idea of being tracked and identified through machine learning raises profound concerns about fundamental freedoms.

But those cultural norms are already eroding, without any significant protest or loud complaint. Right now, in lower Manhattan, 9,000 linked cameras feed directly into a central command center via a New York Police Department surveillance system. The system enables police officers to watch New Yorkers in real time. Right now, in many cities, automated license plate readers scan the streets, recording the location of every car in public and creating a massive searchable dataset. Right now, police body cameras record the streets, potentially creating another searchable archive of public life.

A shift to smarter cameras and smarter AI search capabilities may not seem so threatening as we slowly acclimate to these forms of mass surveillance. But they are a threat, and they will be more so when each of those technologies can be linked together and mined through AI.

So, what is to be done?

Organizations like the ACLU and other civil liberties and privacy groups have begun raising awareness of how surveillance technologies threaten liberty and erode privacy. Groundbreaking journalism has amplified their work. But this conversation must become a loud and vigorous national debate. Large-scale artificially intelligent surveillance systems have not yet arrived in America, and if citizens understand their chilling capabilities, such systems might never become part of our architecture of mass surveillance.

Efforts by local governments seem, thus far, to offer the most promising check on growing surveillance powers. In Seattle, Washington; Santa Clara County, California; Oakland; and the Boston area, local communities have demanded more transparency and accountability from police about their use of technology. But they are just a fraction of the jurisdictions that will need to act.

In my book, I propose holding annual summits to build local accountability for new policing technologies. These summits would bring together government officials, police, community members, technologists, lawyers, academics, and other interested parties to conduct a quasi-risk assessment of the privacy risks posed by all new surveillance technologies.

Finally, tech companies can help. They are the driving force for technological change, and they can provide privacy solutions. Artificial intelligence can be programmed to identify faces, but it can also be programmed not to identify faces unless certain parameters are met. Patterns of crime can be automatically flagged, but activities protected by the Constitution can just as automatically be ignored. Maybe we want cameras that can spot machine guns in public, but not ones that flag protest signs or protesters.

Community voices need to be in the room at the design stage of these technologies. Tech companies should open the door to academics, lawyers, and civil society to ask hard questions before creating invasive technologies. The mentality that drives tech to build now and ask questions later, which has been so successful for disruptive innovation, does not always work when constitutional rights are at stake.

Hundreds of millions of AI-enhanced surveillance cameras are being stocked in overseas warehouses right now, getting consistently cheaper and easier to access. You don’t need a predictive algorithm to see the future of predictive policing, but you might need a law to regulate it. At a minimum, you need a national conversation to give citizens a say in this next-generation civil rights fight.