Hey Clearview, Your Misleading PR Campaign Doesn’t Make Your Face Surveillance Product Any Less Dystopian

In the last few weeks, a company called Clearview has come under fire for marketing a reckless and invasive facial recognition tool to law enforcement. The company claims the tool can identify people in billions of photos nearly instantaneously. And Exhibit A in support of its claim to law enforcement that its app is accurate? A test that Clearview boasts was modeled on the ACLU’s work calling attention to the dangers of face surveillance technology.

Imitation may be the sincerest form of flattery, but this is flattery we can do without. If Clearview is so desperate to begin salvaging its reputation, it should stop manufacturing endorsements and start deleting the billions of photos that make up its database, switch off its servers, and get out of the surveillance business altogether.

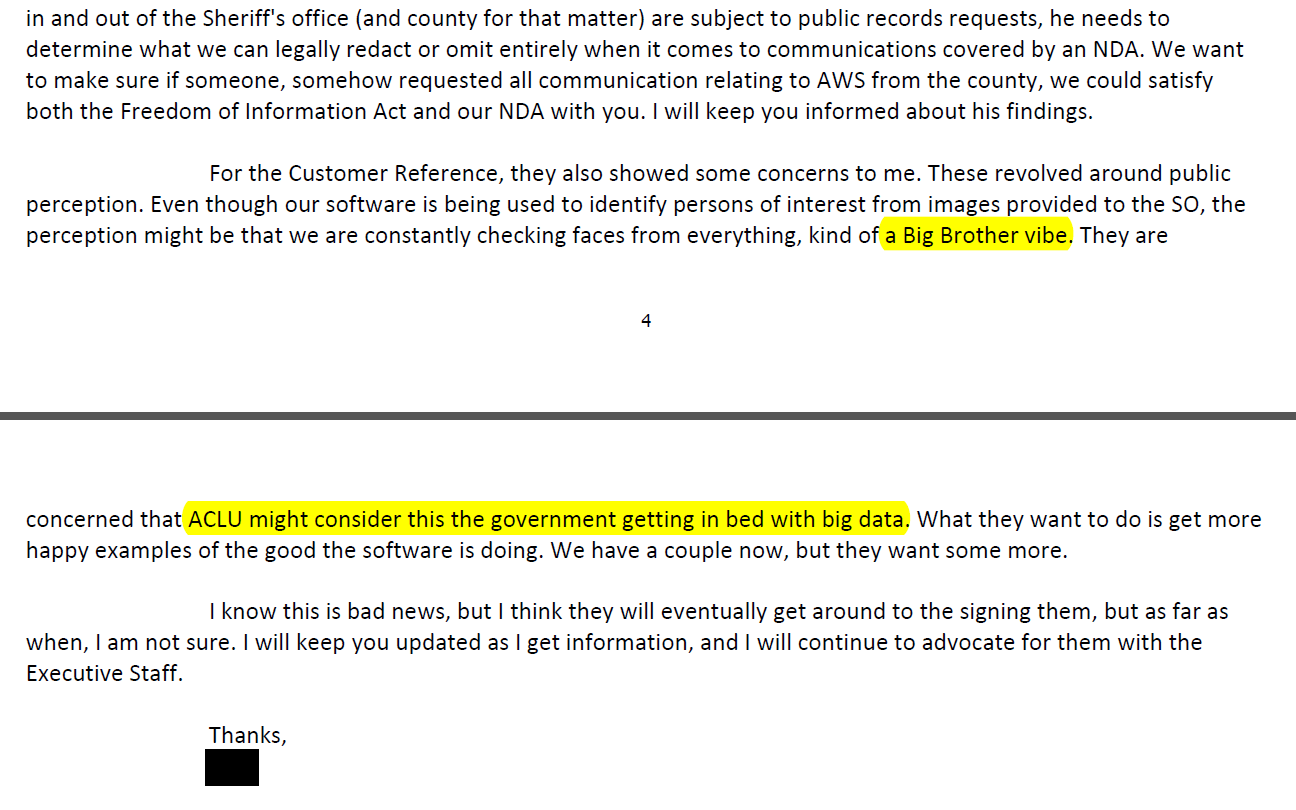

Clearview’s failed attempt to use the ACLU’s work to support its product exposes a larger industry truth: Companies can no longer deny the civil rights harms of face surveillance in their sales pitch. This harkens back to when we obtained emails between Amazon and the Sheriff’s office in Washington County, Oregon, in which valid concerns were raised.

How did all of this start? In May of 2018, the ACLU released the results of an investigation showing that Amazon was selling face surveillance technology to law enforcement. Then, in a test the ACLU conducted in July 2018 simulating how law enforcement were using the technology in the field, Amazon’s Rekognition software incorrectly matched 28 members of Congress, identifying them as other people who had been arrested for a crime. The false matches were disproportionately of people of color, including six members of the Congressional Black Caucus, among them civil rights legend Rep. John Lewis. The results of our test were not new. Numerous academic studies before it had found demographic differences in the accuracy of facial recognition technology.

Following our work, members of Congress raised the alarm with numerous government agencies over the civil rights implications of facial recognition technology. Cities nationwide are banning government use of the technology as part of ACLU-led efforts. In Michigan and New York, activists are fighting to prevent the face surveillance of Black communities and others. And last year, California blocked the use of biometric surveillance with police body cameras.

There is a groundswell of opposition to face surveillance technology in the hands of government. Despite all that, Clearview insists on amassing a database of billions of photos, using those photos to develop a shockingly dangerous surveillance tool, and selling that tool without restriction to law enforcement inside the U.S. and around the world.

For the record, Clearview’s test couldn’t be more different from the ACLU’s work, and it leaves crucial questions unanswered. Rather than searching for lawmakers against a database of arrest photos, Clearview apparently searched its own shadily assembled database of photos. Clearview claims that images of the lawmakers were present in the company's massive repository of face scans. But what happens when police search for a person whose photo isn't in the database? How often will the system return a false match? Are the rates of error worse for people of color?

Further, as we pointed out when we released the findings of our test, an algorithm’s accuracy is likely to be even worse in the real world, where photo quality, lighting, user bias, and other factors are at play. Simulated tests do not account for this.

There is also no indication that Clearview has ever submitted to rigorous testing. In fact, the tests of this technology that have been done, including by the National Institute of Standards and Technology and by Joy Buolamwini and Timnit Gebru, have shown that facial analysis technology has serious problems with the faces of women and people with darker skin.

Rigorous testing means more than attempting to replicate our test, and protecting rights means more than accuracy. Clearview's technology gives government the unprecedented power to spy on us wherever we go — tracking our faces at protests, AA meetings, political rallies, churches, and more. Accurate or not, Clearview's technology in law enforcement hands will end privacy as we know it.

Despite all this, Clearview somehow has the gall to create the impression that the ACLU might endorse its dangerous and untested surveillance product. Well, we have a message for Clearview: Under. No. Circumstances.

If Clearview is so confident about its technology, it should subject its product to rigorous independent testing in real-life settings. And it should give the public the right to decide whether the government is permitted to use its product at all.

We stand with the more than 85 racial justice, faith, and civil, human, and immigrants’ rights organizations, the cities of San Francisco, Oakland, Berkeley, Somerville, Cambridge, and Brookline, and the hundreds of thousands of people who have called for an end to powerful facial recognition technology in the hands of government.