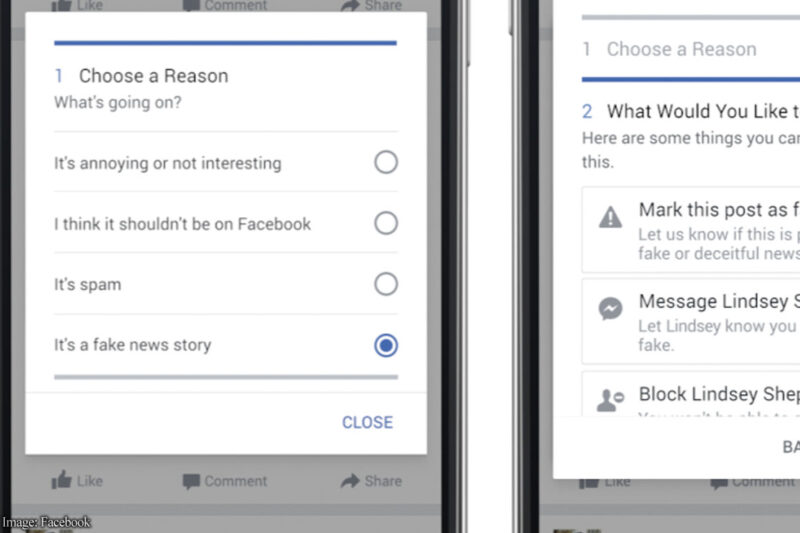

Facebook yesterday announced that it was testing steps to stem the flow of "fake news" through its platform. The company announced the plan in an online post, and company executives also gave us at the ACLU a quick briefing. Under the new policy, postings that users flag as false will be referred to a "third-party fact checking organization" such as Snopes. If that third party decides the piece is false, Facebook will put a small banner on it saying "Disputed by Snopes," or by whichever third party checked it, with a link to that third party's explanation of why the piece is regarded as false.

As we wrote earlier this week, we do not think Facebook should set itself up as an arbiter of truth. While we still have questions, of all the proposals that have been publicly discussed since the election first sparked widespread focus on the problem of fake news, this may be the best and most carefully crafted approach for the company to take. It is an approach based on combating bad speech with more speech. Instead of squelching or censoring stories, Facebook adds more information to posts, telling people, in effect, that "this party here says this material shouldn't be trusted." That does not raise the censorship concerns that more heavy-handed approaches would. We applaud Facebook for responding to the pressure it is under on this issue with a thoughtful, largely pro-speech approach.

That said, some questions and concerns about the details remain. Crowdsourcing has proven to be a very useful and successful model for many forms of information-sifting online, but it can also be problematic, mainly because of the risk of a "heckler's veto," in which people who dislike a post gang up to mark it as "false" in order to suppress the point of view it represents. At the ACLU we have received many complaints from people whose posts were removed because political opponents falsely flagged them as "offensive" or as otherwise violating Facebook's terms of service. Indeed, it's happened to us! Here Facebook is seeking to avert that problem by referring flagged pieces to the third-party fact checkers for manual review.

Facebook indicated that posts marked as disputed will be downgraded by "The Algorithm," which the company uses to decide which of the many posts by our friends will actually appear in our newsfeed. That means Facebook is, in effect, formally endorsing those fact checkers' judgments. The executives we spoke with indicated that The Algorithm would not downgrade stories that have received a lot of fake news flags but have not yet been reviewed by a fact checker. We were glad to hear that, because otherwise there would be no protection against the heckler's veto.

One issue we are not clear on is what relationship Facebook will have with these third-party fact-checking organizations: whether it will pay them, or simply rely on those organizations' self-interest in attracting the traffic that an analysis of a trending news item, and the consequent link from Facebook, will bring. Snopes, for example, is advertising-supported, and so would have an interest in drawing traffic from controversies over questionable viral news pieces.

Perhaps the biggest question is what the boundaries will be for how this system is applied. As I discussed in my prior post, the question of what is fact and what is fiction is a morass, and the line between them is often impossible to draw neutrally or objectively. Armies of philosophers working for over two thousand years have been unable to come up with a satisfactory answer to the question of how to distinguish the two. And there is an enormous amount of material out there fitting every gradation between the most egregious hoax and the merely mistaken or badly argued. What if a piece is largely true, but includes a single intentional, consequential lie?

Facebook's answer is that, for now at least, it is focusing its efforts on "the worst of the worst, on the clear hoaxes spread by spammers for their own gain." From what we were told, it also sounds like whatever algorithm the company uses to refer stories to the third-party fact checkers will not only incorporate the number of fake news flags received from users, but also focus on pieces that are actually trending.

That may be all they are able to do, because this system is not very scalable. Facebook says it will only flag a story if one of the fact-checking organizations has determined it’s false, and has produced a written explanation as to why. That is a very labor-intensive process, one that presumably cannot be applied beyond a few of the most widely circulating pieces.

It's inevitable that the company will quickly be thrown into controversies over particular pieces and whether or not they should be flagged. To cite just one possible example, would a piece denying the reality of climate change count? No matter where the company sets the bar for which pieces it refers to the fact checkers, it will face persistent criticism for not flagging all the stories that fall just below that bar.

When Facebook tells users that Snopes has declared a piece false, that will not carry much weight with those who are part of a political movement that, as I argued in my prior post, has extremely robust intellectual defenses against factual material that challenges its political beliefs. Facebook will likely find it impossible both to enable fact-checking and to be seen as neutral by those who reject those facts and any organizations that validate them. That said, this new attempt to fight fake news will no doubt give pause to at least some posters and re-posters of "clear hoaxes spread by spammers for their own gain," and dampen the spread of such material by naïve, non-politically motivated users. That still leaves a lot of room for non-mercenary political propaganda that includes widespread falsehoods.

We will be very interested in following the details of how this new approach is implemented.