What Lurks Behind All That Immigration Data?

The United States has a long history of using cutting-edge technology to collect and analyze data on immigrants. Unfortunately, we have an equally long history of misusing that data to justify nativist and exclusionary policies.

More than 100 years after the initial use of electric tabulating machines to track immigrant populations, the methods and technologies used to collect immigrant data have changed dramatically. The danger of misuse, however, remains. As we evaluate new government proposals to deploy artificial intelligence for “extreme vetting” programs, we would do well to keep history in mind and take care to ensure that the technology is effective and the analysis it generates is accurate before we allow it to inform immigration policy.

Some of the earliest statistical analysis tools to quantify immigrant populations arose in the late 19th century. Starting in 1870, under the direction of Francis Amasa Walker, the Census Office in the Bureau of Statistics began taking steps to introduce electric tabulating machines, with a simultaneous push to collect more information about immigrants. Early tabulating machines, invented by future IBM founder Herman Hollerith, could cross-tabulate data much more efficiently than hand-counting. This enabled the Census Office to collect sophisticated information on first- and second-generation immigrants by country of birth and foreign parentage.

Walker used the data tabulated with Hollerith’s machines in 1890 to bolster the theory of “race suicide” (the idea that native-born Americans would have fewer children as more immigrants entered the workforce). He also used it to promote severe immigration restrictions on those “beaten men from beaten races[,] representing the worst failures in the struggle for existence.” Other statisticians would use the data to suggest that individual characteristics could be determined by race. Economists and statisticians in the early 20th century exploited this census data to provide justification for race-based immigration restriction.

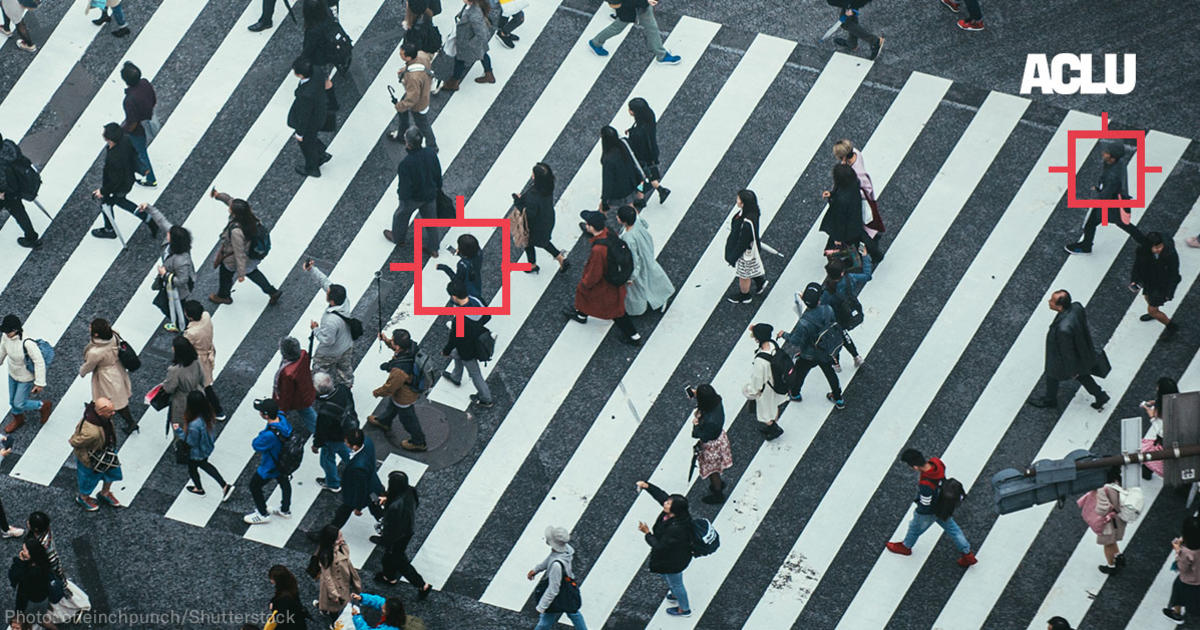

A little over a century later, the Department of Homeland Security has embarked on even more ambitious projects cataloguing immigrant data. DHS is developing technology not only to vastly increase collection of information, but also to perform automated analysis and make decisions on whom to admit into our country.


Reports suggest that DHS may be employing tools that ostensibly analyze tone and sentiment to try to identify national security threats from social media posts. The benefits of social media analysis for threat detection are questionable at best. Even human analysts can misunderstand plain English when cultural context and nuance come into play, and automated tools can dramatically magnify such misunderstandings.

Researchers have found, for instance, that common natural language processing tools often fail to identify African-American vernacular English as English. Such tools also raise significant privacy concerns. Broad social media monitoring would almost certainly involve collecting vast quantities of information on Americans, including information about their professional networks, political and religious values, and intimate relationships.

Automated analyses of languages other than English pose even greater challenges. Most commercially available natural language processing tools can only reliably process text in certain “high-resource languages,” such as English, Spanish, and Chinese (as opposed to, for instance, Punjabi or Swahili). These are languages with a large body of resources available to train tools for language interpretation.

Applying tools trained on high-resource languages to analyze text in other languages will certainly divorce words from meaning. Consider the Palestinian man who posted “good morning” to his Facebook account. When Facebook’s automated translation tool mistranslated his caption as “attack them,” Israeli authorities arrested him and questioned him for hours.

Social media information, along with biometric data and other details collected from a wide variety of government and commercial databases, may ultimately get fed into DHS’s Automated Targeting System (ATS). This overarching system evaluates international travelers and generates an “automated threat rating,” which is supposedly correlated with the likelihood that a person is engaged in illegal activity. Aspects of the system are exempt from ordinary disclosure requirements. Individuals can’t see their rating, know which data are used to create it, or challenge the assessment. The system is also applied to every person who crosses the border, regardless of citizenship status. We do know that ATS pulls information from state and federal law enforcement databases. It also draws on airline travel database entries, called Passenger Name Records (PNR).

One traveler who managed to get access to some of the data DHS retained on him found that it identified the book he was reading on one trip across the border. Other details collected from travel database entries can reveal even more sensitive information. Special meal requests, for example, can indicate an individual’s religion, and the type of bed requested in a hotel could reveal details about a person’s relationships. Any broader efforts to collect additional biographic or social media information may feed into the system as well.


At present, ATS is used to flag individuals for further human scrutiny when they travel into or out of the country or when their visas expire. But recent developments suggest that automated tools may soon be used to make final decisions about people’s lives.

A call by Immigration and Customs Enforcement for software companies to bid on the creation of an automated vetting system has come to light. The system would scrutinize both people abroad seeking U.S. visas and foreigners already in the country. In keeping with the goals outlined in President Trump’s Muslim ban, the request for proposals called for software capable of evaluating an individual’s propensity to commit crime or terrorism or to “contribute to the national interest.” The algorithm is meant to make automated predictions about who should be allowed in and who should be allowed to stay. It would do so by evaluating open-source data of dubious quality using a hidden formula insulated from public review.

For all of our advances in data science and technology, there is still no way for any algorithm to accurately predict an individual’s terrorism risk or future criminality. Instead, these tools are likely to rely on “proxies,” ultimately making value-based judgments using criteria unrelated to terrorism or criminality.

We’ve seen examples of this in policing contexts. Social media monitoring software companies have marketed their products to law enforcement by touting their ability to monitor protesters online. These products track people who use terms like “#blacklivesmatter,” “#ImUnarmed,” and “#PoliceBrutality.” An investigation into the Boston Police Department’s use of Geofeedia software found that the tool was used to monitor the entire Boston Muslim community by tracking common Arabic words and treating them as suspicious.

This administration has repeatedly announced intentions to restrict immigration based on religion, national origin, and other group characteristics. In that context, it is not hard to imagine what proxies might be used for propensity to commit crime or for one’s ability to contribute to the national interest. Given America’s history of cloaking nativist and racist policies in pseudo-scientific language, we need to be vigilant in ensuring that the use of new technology doesn’t subvert our highest values.

This piece is part of a series exploring the impacts of artificial intelligence on civil liberties. The views expressed here do not necessarily reflect the views or positions of the ACLU.

Source: American Civil Liberties Union
